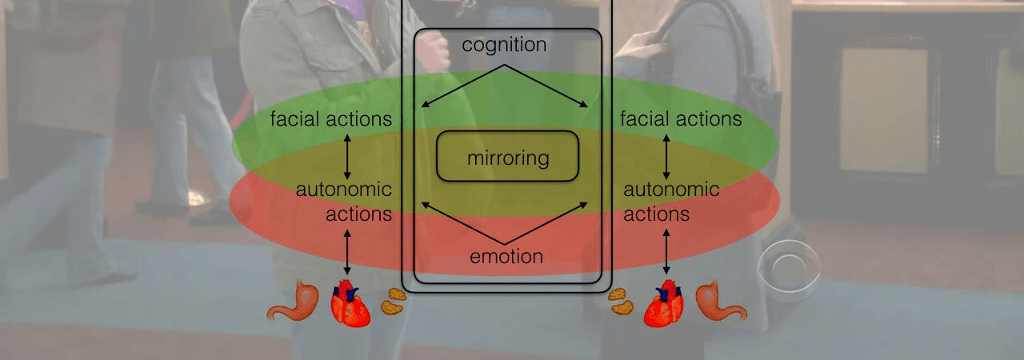

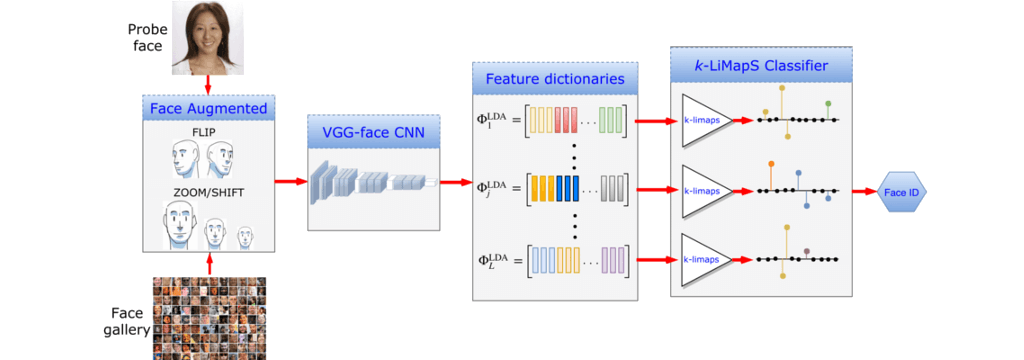

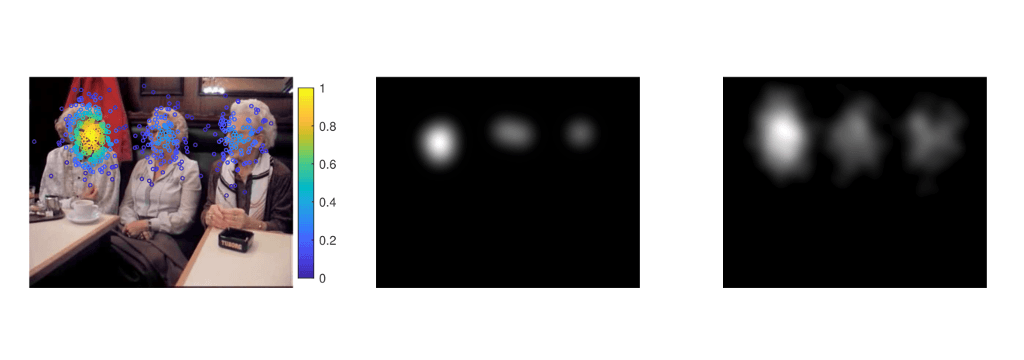

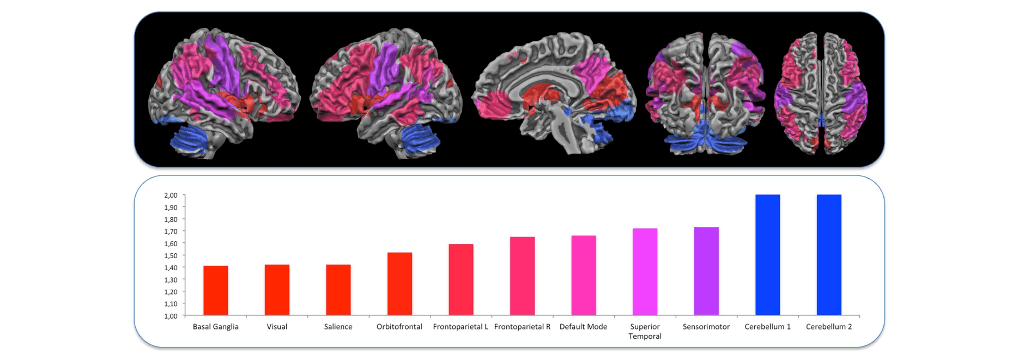

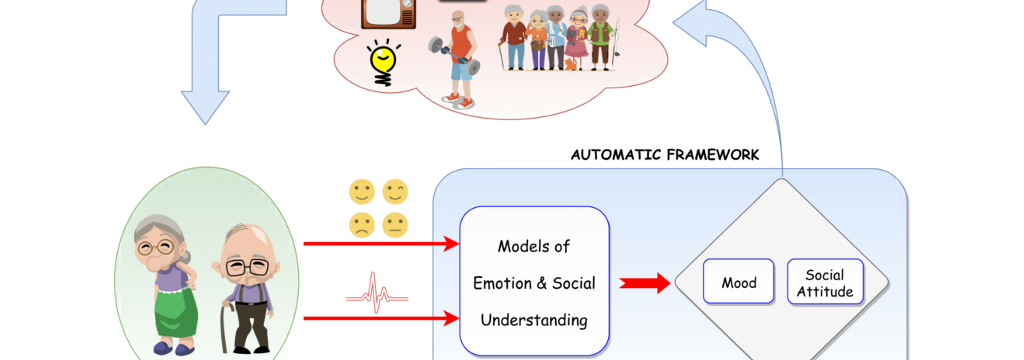

Activities in the PHuSe Lab aim to bridge the gap between the signals gathered by the various modalities used to sense humans (from physiological signals to perceptual and behavioural cues) and the understanding of those signals, so as to advance natural interfaces, social interaction, health and wellbeing. Current research concerns modelling and understanding affective expressions, human face identities, cognitive/emotional states and, more generally, nonverbal behaviours such as eye/gaze behaviour and hand/body gestures.

To this end we make use of a variety of sources and signals, from images and videos to depth sensing (Kinect), physiological signals (EEG, ECG, EDA), eye-tracking data, fMRI and classic clinical/medical modalities. We draw on several theoretical approaches and tools, such as Bayesian graphical modelling and Bayesian nonparametrics, sparse coding, deep nets, and stochastic differential equations and processes. To support this endeavour, we also exploit parallel computing, namely GPU computing with CUDA.