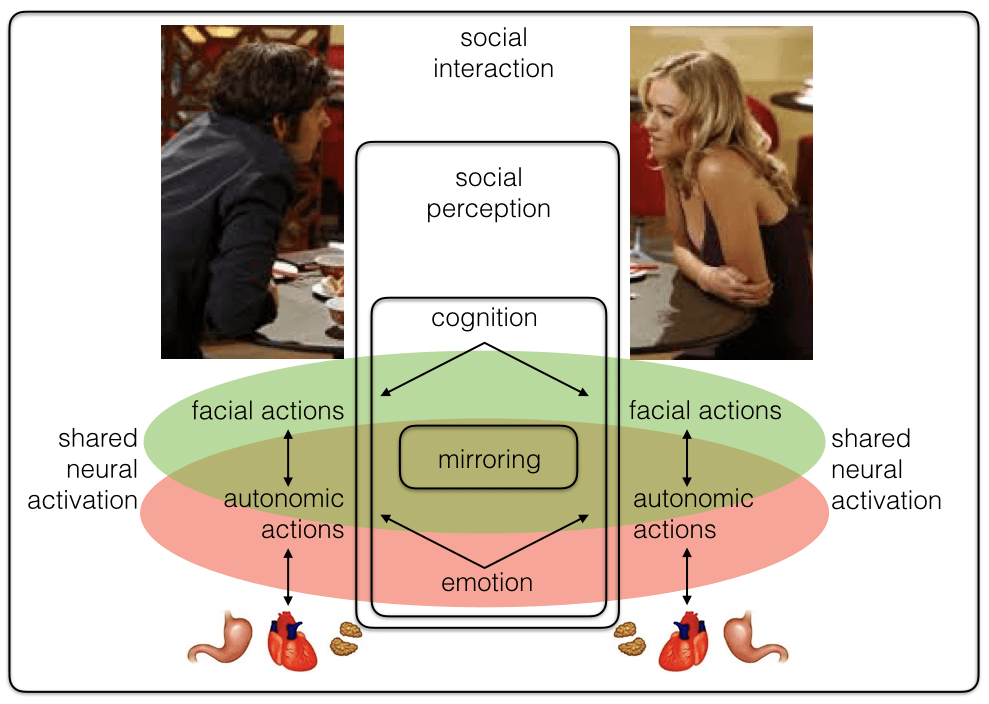

Affect is a key component of nonverbal behaviour. We take a simulationist approach to the analysis of facially displayed emotions, for example during a face-to-face interaction between an expresser and an observer. The rationale for this approach is the large body of evidence from affective neuroscience showing that when we observe emotional facial expressions, we react with congruent facial mimicry.

At the heart of this perspective is the enactment of the perceived emotion in the observer. Further, in more complex situations, affect understanding is likely to rely on a comprehensive representation that grounds the reconstruction of the bodily state associated with the displayed emotion.

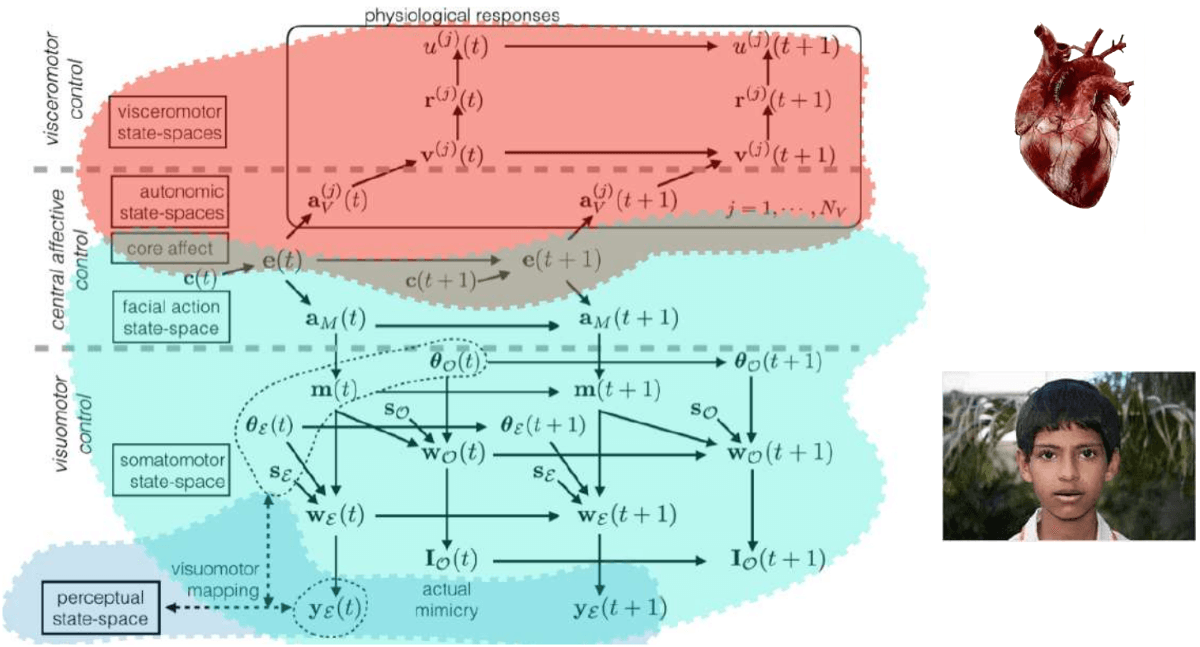

We proposed a novel probabilistic framework based on a deep latent representation of a continuous affect space [1], which can be exploited for both the estimation and the enactment of affective states in a multimodal space (visible facial expressions and physiological signals). We have shown that our approach addresses both problems in a unified and principled way, avoiding ad hoc heuristics while minimising learning effort.
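
To make the estimation/enactment idea concrete, here is a minimal toy sketch, not the model from [1]. It assumes a linear-Gaussian latent variable model in place of the deep one: a latent affect state `z` with a standard normal prior generates both a facial feature vector and a physiological feature vector through linear maps. All names, dimensions, the noise level and the random "decoder" weights are hypothetical and chosen only for illustration. Estimation is the posterior mean of `z` given an observed face; enactment decodes that estimate back into both modalities.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes and the noise level are illustrative assumptions.
LATENT_DIM = 2   # assumed size of the continuous affect space
FACE_DIM = 10    # assumed size of a facial-expression feature vector
PHYSIO_DIM = 4   # assumed size of a physiological feature vector
NOISE_STD = 0.1  # assumed observation noise (standard deviation)

# Random linear maps standing in for trained deep decoders (assumption).
W_face = rng.normal(size=(FACE_DIM, LATENT_DIM))
W_physio = rng.normal(size=(PHYSIO_DIM, LATENT_DIM))


def estimate_affect(face_obs):
    """Posterior mean of z given a face observation.

    Model: z ~ N(0, I), face = W_face @ z + noise, noise ~ N(0, NOISE_STD^2 I).
    The posterior mean is (W^T W + sigma^2 I)^{-1} W^T face.
    """
    scaled_precision = W_face.T @ W_face + NOISE_STD**2 * np.eye(LATENT_DIM)
    return np.linalg.solve(scaled_precision, W_face.T @ face_obs)


def enact(z):
    """Decode a latent affect state into both modalities."""
    return W_face @ z, W_physio @ z


# An "expresser" displays a face generated from a hidden affect state.
z_true = np.array([0.8, -0.3])
face_obs = W_face @ z_true + NOISE_STD * rng.normal(size=FACE_DIM)

# The "observer" estimates the affect state, then enacts it: it reconstructs
# the face and also the physiological signals it never observed.
z_hat = estimate_affect(face_obs)
face_sim, physio_sim = enact(z_hat)
```

The design point the sketch tries to convey is that a single shared latent space serves both directions: inference maps an observed modality into the affect space, and generation maps a point in that space out to every modality, including unobserved ones.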