Beyond visible nonverbal behaviour such as facial expressions and gaze, physiological signals carry important, “honest” information for understanding others’ behaviour. Indeed, it has been shown [1] that they can be effectively exploited both for analysing and for simulating core affect states. In that work, heart rate variability (HRV) derived from the electrocardiogram (ECG) and electrodermal activity (EDA) signals were exploited, as captured by sensors throughout an experiment in a classic lab setting.

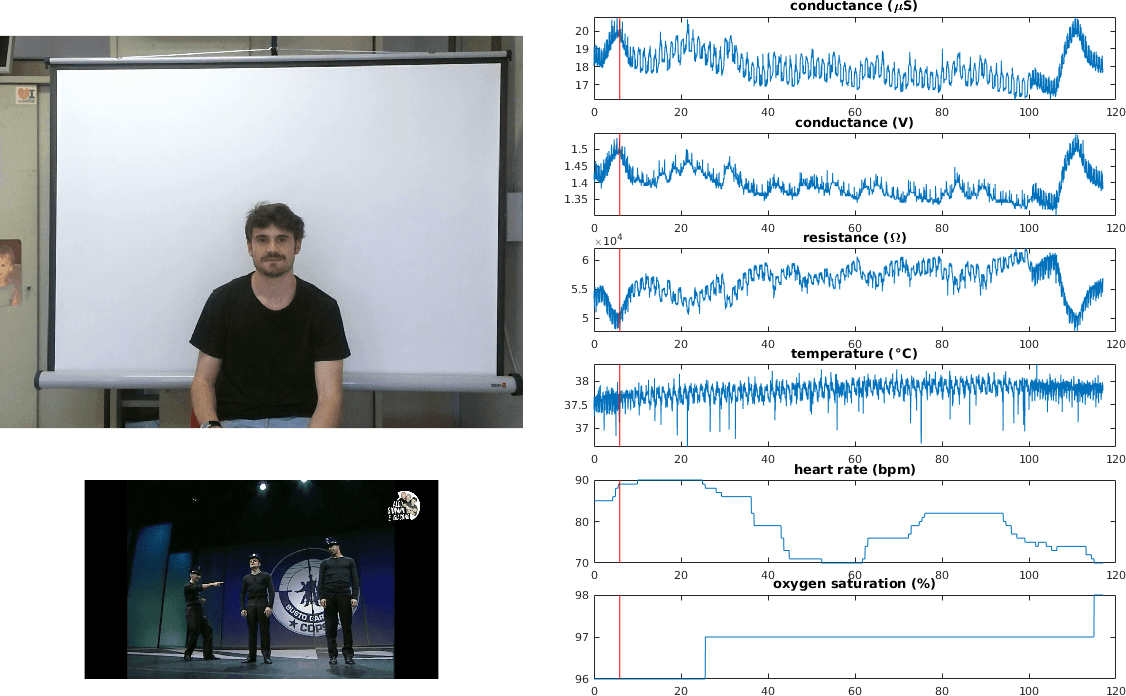

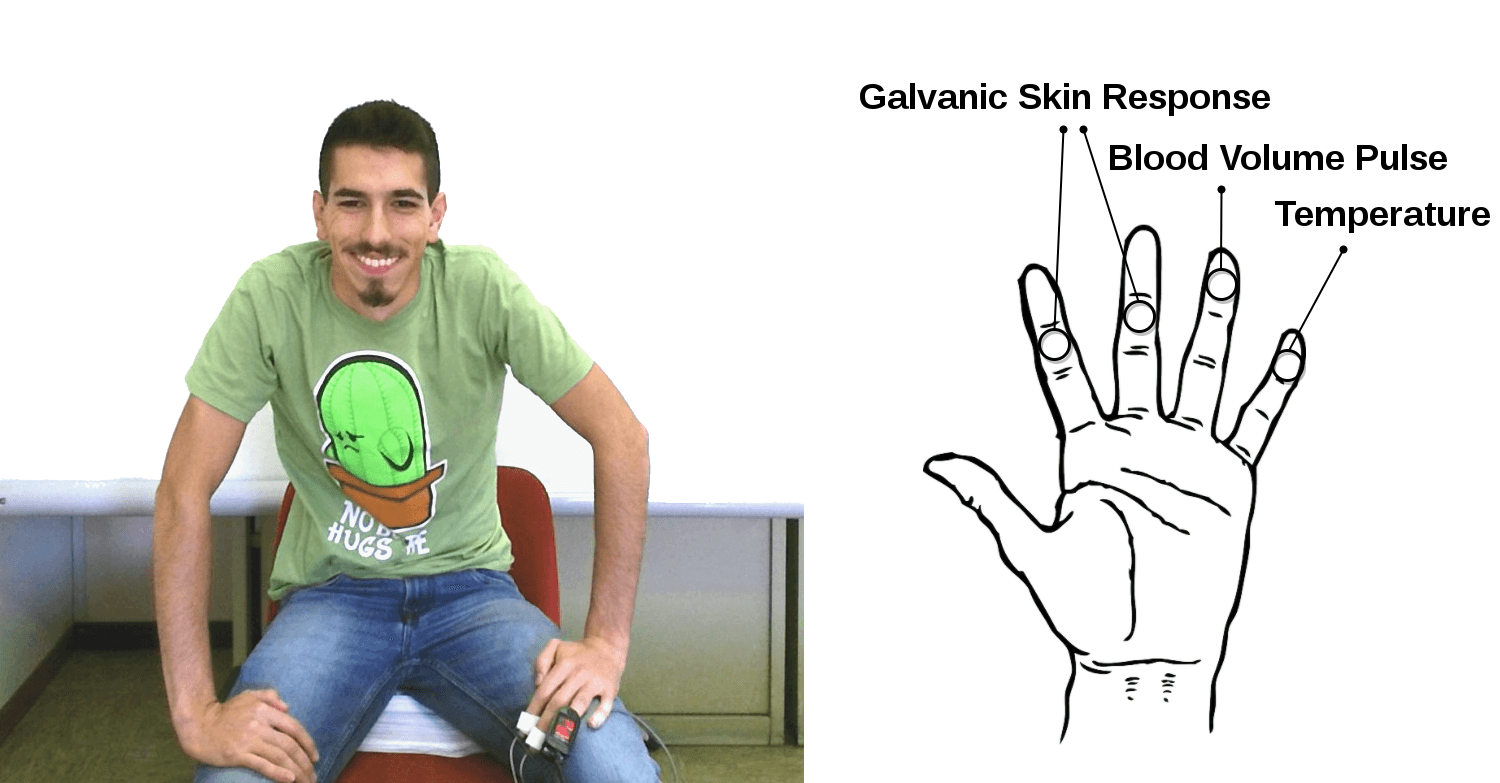
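To give a concrete idea of what "heart rate variability from ECG" means in practice, the sketch below computes three common time-domain HRV measures (mean heart rate, SDNN and RMSSD) from a sequence of R-R intervals. It assumes R peaks have already been detected in the ECG and artefacts removed. The function name `hrv_time_domain` is hypothetical, and these particular features are a standard illustrative choice, not necessarily the ones used in [1].

```python
import math
from statistics import mean, stdev


def hrv_time_domain(rr_ms):
    """Return (mean HR in bpm, SDNN in ms, RMSSD in ms) for R-R intervals in ms.

    Hypothetical helper: assumes R peaks were already detected in the ECG
    and that the interval series is free of ectopic beats and artefacts.
    """
    rr = [float(x) for x in rr_ms]
    if len(rr) < 2:
        raise ValueError("need at least two R-R intervals")
    # Mean heart rate: 60 000 ms per minute divided by the mean R-R interval.
    hr_bpm = 60000.0 / mean(rr)
    # SDNN: sample standard deviation of all R-R (normal-to-normal) intervals.
    sdnn = stdev(rr)
    # RMSSD: root mean square of successive differences between intervals.
    diffs = [b - a for a, b in zip(rr, rr[1:])]
    rmssd = math.sqrt(mean([d * d for d in diffs]))
    return hr_bpm, sdnn, rmssd


# Toy usage with made-up intervals (not data from [1]).
hr, sdnn, rmssd = hrv_time_domain([800, 810, 790, 805])
```

SDNN reflects overall variability, while RMSSD emphasises beat-to-beat changes; both are typically computed over fixed-length windows when tracking affect over time.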

At the same time, to address the scarcity of multimodal datasets of spontaneous emotions, a novel multimodal dataset has been acquired [2] and publicly released, resulting from an experiment focused on eliciting a positive emotion, namely amusement. The gathered data include RGB video and depth sequences along with physiological responses (electrodermal activity, blood volume pulse and temperature). In addition, a novel web-based annotation tool has been released to support continuous affect-state modelling and benchmarking: http://phuselab.di.unimi.it/resources.php#dataset

Although suitable for general modelling purposes, signal acquisition in a lab setting is not always feasible or appropriate. A new field we are currently investigating is remote physiological measurement, which allows, for instance, contactless estimation of electromyography (EMG) signals [3]. In particular, we addressed the problem of simulating the electromyographic signals produced by the muscles involved in facial expressions (notably those conveying affective information), relying solely on facial landmarks detected in video sequences. We proposed a method based on Gaussian Process regression that predicts the facial electromyographic signal from videos in which people display non-posed affective expressions.
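The core mechanics of Gaussian Process regression can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the method of [3]: it assumes each video frame has already been turned into a fixed-length feature vector derived from facial landmarks, and that a synchronised EMG amplitude is available for the training frames. The squared-exponential kernel, the fixed hyperparameters and the names `rbf_kernel` and `gp_predict` are illustrative choices; the published approach may use different features, kernels and hyperparameter learning.

```python
import numpy as np


def rbf_kernel(A, B, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel between the rows of A (n x d) and B (m x d)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    sq_dists = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return signal_var * np.exp(-0.5 * np.maximum(sq_dists, 0.0) / length_scale**2)


def gp_predict(X_train, y_train, X_test, length_scale=1.0, signal_var=1.0, noise_var=1e-2):
    """Posterior mean and variance of a GP regressor at the rows of X_test.

    Hypothetical helper: X_* hold per-frame landmark features, y_train holds
    the EMG amplitude for the training frames. Hyperparameters are fixed here
    rather than learned by maximising the marginal likelihood.
    """
    y_train = np.asarray(y_train, dtype=float)
    y_mean = y_train.mean()  # model the signal as a zero-mean GP around its mean
    K = rbf_kernel(X_train, X_train, length_scale, signal_var)
    K[np.diag_indices_from(K)] += noise_var  # Gaussian observation noise
    L = np.linalg.cholesky(K)
    # alpha = K^{-1} (y - mean), computed through the Cholesky factor.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    K_s = rbf_kernel(X_train, X_test, length_scale, signal_var)  # n x m
    mean = y_mean + K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    # Diagonal of K_ss - K_s^T K^{-1} K_s; k(x, x) = signal_var for this kernel.
    var = signal_var - np.sum(v**2, axis=0)
    return mean, var


# Toy usage with synthetic stand-ins for landmark features and EMG amplitude.
rng = np.random.default_rng(0)
X = rng.uniform(-3.0, 3.0, size=(50, 2))
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(50)
mu, var = gp_predict(X, y, X[:5])
```

A practical attraction of the GP framework in this setting is that the predictive variance comes for free: frames whose landmark configuration is far from anything seen in training fall back towards the mean EMG level with high uncertainty, rather than producing overconfident estimates.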